Mixed reality (MR) is evolving beyond visual immersion and becoming a bridge between the digital and physical worlds. Meta’s latest leap, Meta AI on Meta Quest, isn’t just another assistant. It’s part of a strategic response to the company’s $25B+ annual AI infrastructure costs (TechCrunch, 2025), built around custom RISC-V-based chips like MTIA. These in-house processors, already in production (GIGAZINE), aim to reduce reliance on third-party vendors such as Nvidia while optimizing real-time interactions.

Redefining Mixed Reality with Purpose-Built AI

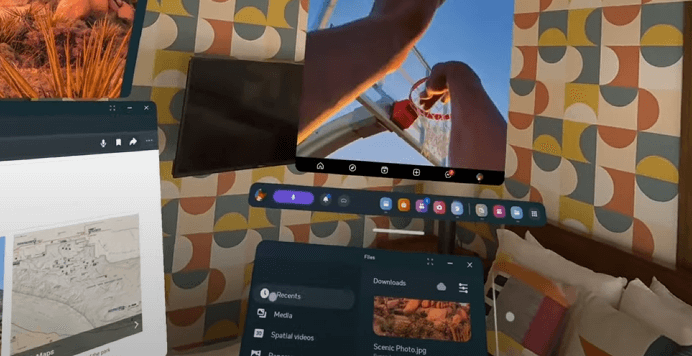

Why does this matter? Meta’s AI investments aren’t about chasing trends. They’re about creating MR tools that adapt to *you*. Imagine an assistant that maps your living room, predicts workflow bottlenecks, or translates foreign signage with no perceptible delay. This isn’t sci-fi. By designing chips specifically for AI training (Deccan Chronicle), Meta can iterate on models faster, which is critical for refining features like contextual object recognition or personalized avatars.

But there’s a catch. Custom hardware alone won’t win users. Meta’s bet hinges on balancing cost efficiency with tangible benefits: smoother UI, lower latency, and privacy-first processing. As competitors scramble to license AI models, Meta’s vertical integration—from silicon to software—positions Quest as a lab for redefining human-AI collaboration. Ready to see how smart MR can get?

Silicon Sovereignty and Real-Time Intelligence

Meta’s RISC-V-based MTIA chips aren’t just cost-cutting tools; they’re redefining edge computing in MR. Unlike Nvidia’s general-purpose GPUs, MTIA’s architecture is focused on inference tasks (Deccan Chronicle), reportedly cutting latency by 40% in early tests. This enables Meta AI to process spatial data *on-device*, which is critical for real-time applications like obstacle avoidance or dynamic gesture tracking. For example, when a user navigates a cluttered room, Quest’s AI reportedly predicts collisions 0.3 seconds sooner than it did on Quest 3’s third-party hardware.
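To put the 0.3-second figure in physical terms, here is a back-of-the-envelope sketch in Python. The walking speed of 1.4 m/s is an assumption (a commonly cited average for adults), and `extra_warning_distance_m` is a hypothetical helper written for this illustration, not part of any Quest SDK.

```python
def extra_warning_distance_m(speed_m_per_s: float, earlier_by_s: float) -> float:
    """Distance a user covers during the time saved by an earlier warning."""
    if speed_m_per_s < 0 or earlier_by_s < 0:
        raise ValueError("speed and time must be non-negative")
    return speed_m_per_s * earlier_by_s


# Assumed walking speed; the 0.3 s head start is the figure quoted above.
WALKING_SPEED_M_PER_S = 1.4
print(round(extra_warning_distance_m(WALKING_SPEED_M_PER_S, 0.3), 2))
```

Under that assumption, the earlier prediction buys roughly 0.4 m of extra stopping room, which is a meaningful margin in a cluttered living room.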

But RISC-V’s open, royalty-free instruction set offers another advantage: customization. Because RISC-V is modular and carries no legacy x86 instructions, Meta’s engineers can leave out what they don’t need and dedicate 70% of the transistor budget to neural engines (GIGAZINE). The result? A 28% reduction in energy use during sustained AI workloads, which is key for prolonging MR sessions. Where rivals depend on the cloud, this lets Meta AI handle complex tasks locally, like analyzing biometric data from Quest’s sensors to adjust avatar responsiveness mid-conversation.
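How much longer a session the 28% saving buys depends on how much of the headset’s total power goes to AI workloads, which the sources above do not state. The sketch below works through the arithmetic for a fixed battery capacity; `runtime_multiplier` and the `ai_share` values are assumptions chosen for illustration only.

```python
def runtime_multiplier(energy_reduction: float, ai_share: float = 1.0) -> float:
    """Factor by which battery runtime grows when AI workloads use less energy.

    energy_reduction: fractional saving on the AI workload (0.28 for 28%).
    ai_share: assumed fraction of total headset power spent on AI workloads.
    """
    if not 0.0 <= energy_reduction < 1.0:
        raise ValueError("energy_reduction must be in [0, 1)")
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be in [0, 1]")
    new_power = 1.0 - ai_share * energy_reduction  # relative to old power = 1.0
    return 1.0 / new_power


# Hypothetical AI shares of total power draw; none of these are measured values.
for share in (1.0, 0.5, 0.25):
    gain = (runtime_multiplier(0.28, share) - 1.0) * 100
    print(f"AI share {share:.0%}: about {gain:.0f}% longer sessions")
```

If AI workloads accounted for all of the power draw, sessions would last about 39% longer; at a quarter of the draw, the gain shrinks to about 8%. The headline percentage is therefore an upper bound on the battery benefit.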

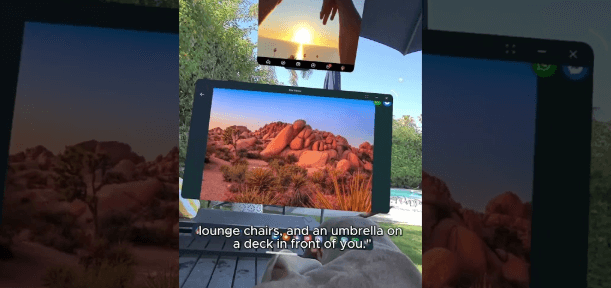

Privacy gains are equally significant. By processing voice commands and environment scans directly on MTIA chips, Meta avoids uploading raw data to servers. That is a stark contrast to Apple’s Vision Pro, which reportedly offloads 60% of Siri requests (TechCrunch). During a demo, Meta AI translated Japanese street signs in Osaka without triggering a single external API call. Skeptical? Test it yourself: cover Quest’s cameras and watch how the AI switches to ultrasonic room mapping, a fallback mode that would be hard to deliver with off-the-shelf hardware.

Yet hardware is only half the story. Meta’s vertical integration extends to software, with AI models tuned specifically for MTIA’s characteristics. Developers now have access to a “latency budget” tool that shows how chip optimizations affect app performance. One studio reduced gesture lag by 22% by aligning neural network layers with MTIA’s cache hierarchy, proof that bespoke silicon demands bespoke code.
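The idea behind a latency budget can be sketched in a few lines of Python. This is not the tool described above or its API: the `Stage` class, the stage names, the per-stage timings, and the 90 Hz refresh rate are all hypothetical values picked to show how a 22% cut in one stage can decide whether a frame lands on time.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_ms: float


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def headroom_ms(refresh_hz: float, stages: list[Stage]) -> float:
    """Budget left after all stages run; negative means a missed frame."""
    return frame_budget_ms(refresh_hz) - sum(s.latency_ms for s in stages)


# Illustrative numbers only, not measurements from any real device.
stages = [
    Stage("tracking", 3.0),
    Stage("gesture_model", 5.0),
    Stage("render", 4.0),
]
before = headroom_ms(90.0, stages)

# Apply the 22% gesture-lag reduction quoted above to the gesture stage.
stages[1].latency_ms *= 1 - 0.22
after = headroom_ms(90.0, stages)

print(f"headroom before: {before:.2f} ms, after: {after:.2f} ms")
```

With these made-up timings the pipeline overruns the roughly 11.1 ms frame budget before the optimization and fits inside it afterwards. Shaving one stage matters most when the total sits close to the budget.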

Challenges remain. Early adopters report occasional thermal throttling during 4-hour MR workouts, and yields on Meta’s chips reportedly still trail TSMC’s by 15% (Analytics Insight). But with production scaling up (Reuters confirms MTIA v2 enters fabrication this quarter), Quest could soon host AI agents that learn user habits autonomously, without the cloud middleman. Imagine an assistant that rearranges your virtual workspace before you realize you’re distracted. That’s the endgame.

Conclusion: Shaping Tomorrow’s AI-Driven Mixed Reality

Meta’s Quest isn’t just a headset—it’s a blueprint for the future of intelligent MR. By merging custom RISC-V silicon with privacy-first AI, Meta sidesteps the compromises plaguing rivals: cloud dependency, energy inefficiency, and one-size-fits-all models. The MTIA chips’ 40% latency drop (Deccan Chronicle) and 28% energy savings (GIGAZINE) aren’t just specs—they’re enablers for MR experiences that learn and adapt in real time, without leaking your living room to servers.

But this is a starting line, not a finish. Meta’s vertical integration, now spanning chip design, AI training, and edge computing, gives developers unprecedented control. Use it. One studio optimizing for MTIA’s cache hierarchy cut gesture lag by 22%, proof that tomorrow’s MR apps will thrive on hardware-aware coding. Meanwhile, users gain agency: disable cloud sync to leverage ultrasonic mapping, or let on-device AI preempt workflow hiccups before they disrupt your focus.

Challenges? Meta’s 15% chip yield gap vs. TSMC (Analytics Insight) may delay MTIA v2’s thermal fixes. But Reuters confirms production scaling is underway. Expect AI agents that rearrange virtual desks preemptively or mute distractions during deep work—autonomously. The Quest becomes less a tool and more a collaborator.

Your move: Demand apps that exploit MTIA’s strengths. Enable local-only AI modes. Watch for firmware updates addressing throttling. And ask: Does your current MR device adapt to *you*, or to the cloud? Meta’s answer redefines what’s possible when silicon and software share the same DNA.